WEST PALM BEACH, FL – Most people are familiar with Google’s regular web crawler, Googlebot, which indexes the web to populate search results. However, Google’s ecosystem relies on a variety of specialized bots, each designed to handle unique tasks that go beyond traditional web crawling. These “special-case crawlers” often operate quietly in the background, unnoticed by most website administrators, yet they play critical roles in enhancing Google’s services.

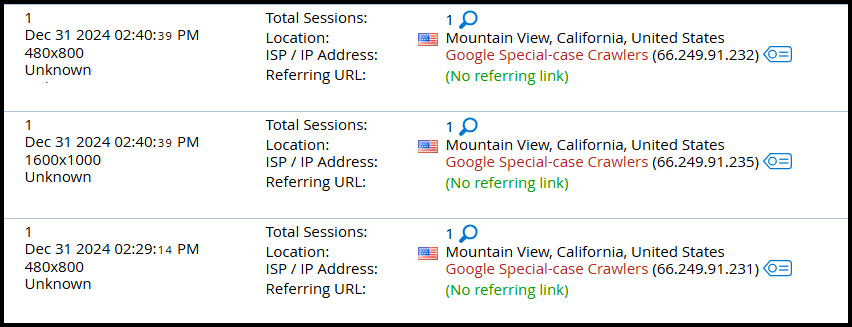

Ever see something like this rummaging through your web pages?

Google’s special-case crawlers are designed to perform tasks beyond the typical scope of regular Googlebot activities. These crawlers are tailored for specific use cases, often focusing on projects that require targeted or unique data collection. Here’s what they might be up to:

1. Testing and Validation

- Google uses special-case crawlers to test website performance, check compliance with web standards, and experiment with new indexing technologies.

- For example, these crawlers might check how well a website supports mobile-first indexing or how it performs under varying load conditions.

2. Collecting Structured Data

- Special-case crawlers can be tasked with identifying and extracting structured data, such as Schema.org markup, JSON-LD, or microdata, to enhance rich results or other features in Google Search.

3. Monitoring Policy Compliance

- These crawlers may ensure that websites comply with Google’s guidelines for ads, security, and content policies.

- For example, they might look for cloaking, deceptive redirects, or malicious scripts.

4. Research and Development

- They may support R&D for new services or features by gathering data for machine learning models or testing the viability of new algorithms.

- For instance, Google might deploy a special crawler to identify trends in AMP (Accelerated Mobile Pages) adoption.

5. Content and Feature Verification

- Special-case crawlers can verify details for features like Google News, Discover, or local business listings, ensuring that the content aligns with specific requirements.

6. Auditing for Accessibility

- These crawlers might check websites for accessibility issues, ensuring compliance with standards like WCAG (Web Content Accessibility Guidelines).

7. Tracking and Mitigating Spam

- They may be deployed to identify patterns of link manipulation, spammy practices, or other violations of search quality standards.
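To make the structured-data collection in item 2 more concrete, here is a minimal sketch of how any crawler could pull JSON-LD blocks out of a page. It is not Google's implementation; the class name `JsonLdExtractor` and the function `extract_json_ld` are hypothetical, and only Python's standard library is used.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._buffer = None  # None means "not inside a JSON-LD script"

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # skip malformed blocks instead of failing the whole page
            self._buffer = None


def extract_json_ld(html):
    """Return a list with one parsed object per JSON-LD block found in html."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.items
```

Running `extract_json_ld` on your own pages is a quick way to see what a structured-data crawler would find there.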

Identifying Google’s Special-case Crawlers

These crawlers usually identify themselves in the user-agent string. The exact strings are listed in Google’s crawler documentation and can change over time. Examples include:

- Google-Read-Aloud: For services that read articles aloud.

- Google-Favicon: For collecting website favicons.

- Google-Site-Verifier: For verifying site ownership in Google Search Console.
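As a rough illustration, the names above can be looked for in access-log lines. The sketch below assumes the common "combined" log format, where the user agent is the last quoted field. The token list is copied from the examples above and should be treated as an assumption: confirm the real user-agent strings in Google's documentation before relying on it. The function names are hypothetical.

```python
import re
from collections import Counter

# Names copied from the examples above; real user-agent strings may differ.
TOKENS = ("Google-Read-Aloud", "Google-Favicon", "Google-Site-Verifier")

# Combined Log Format: client IP first, user agent as the last quoted field.
LOG_LINE = re.compile(r'^(?P<ip>\S+) .* "(?P<agent>[^"]*)"\s*$')


def find_token(user_agent):
    """Return the first known token contained in the user agent, else None."""
    lowered = user_agent.lower()
    for token in TOKENS:
        if token.lower() in lowered:
            return token
    return None


def tally_crawlers(log_lines):
    """Count requests per token across an iterable of log lines."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if match:
            token = find_token(match.group("agent"))
            if token:
                hits[token] += 1
    return hits
```

A match on the user agent alone only shows what a client claims to be, so treat the counts as a starting point rather than proof of origin.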

How to Manage Their Impact

- Monitor Access: Use server logs to track and identify special-case crawlers.

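- Verify User-Agent Strings: A user-agent string can be spoofed, so confirm that these crawlers are legitimate by cross-referencing Google’s official documentation, for example with a reverse DNS lookup on the requesting IP address or a match against Google’s published IP ranges.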

- Optimize for Specific Needs: If a special-case crawler is visiting your site frequently, check which URLs it requests and make sure those resources load quickly and return the content you expect.

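- Adjust Robots.txt: Control their access if needed, but avoid blocking them entirely unless their activity negatively impacts your site. Some Google crawlers ignore the wildcard (*) group and only follow rules that name them, so check the documentation for the crawler in question.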
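Two of the steps above, verifying a crawler and checking robots.txt rules, can be sketched with Python's standard library. The verification follows the reverse-then-forward DNS check that Google documents for its crawlers. The function names, the `Example-Special-Crawler` token, and the sample rules are hypothetical, and the default forward lookup handles IPv4 only.

```python
import socket
from urllib.robotparser import RobotFileParser

# Domains Google documents for reverse-DNS verification of its crawlers.
GOOGLE_DOMAINS = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_google_ip(ip, reverse=socket.gethostbyaddr, forward=socket.gethostbyname_ex):
    """Reverse-resolve the IP, check the domain, then forward-confirm it.

    The resolvers are parameters so the check can be tested without a network.
    """
    try:
        host = reverse(ip)[0]
        if not host.endswith(GOOGLE_DOMAINS):
            return False
        return ip in forward(host)[2]
    except OSError:  # covers socket.herror and socket.gaierror
        return False


# Hypothetical robots.txt: "Example-Special-Crawler" is a placeholder token.
ROBOTS_TXT = """\
User-agent: Example-Special-Crawler
Disallow: /drafts/

User-agent: *
Allow: /
"""


def is_allowed(token, path, robots_txt=ROBOTS_TXT):
    """Report whether the given rules let `token` fetch `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(token, path)
```

The parser only reports what the rules say. Whether a particular crawler honors them is a separate question that Google's documentation answers per crawler.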

Google’s special-case crawlers are generally part of initiatives to improve search quality, enhance user experience, or develop new features. While their exact purposes may vary, they usually operate transparently and can be managed effectively through routine monitoring and adherence to best practices.